When Index Condition Pushdown is used, you cannot trust rows examined as reported by the slow query log (nor in some Performance Schema tables) and you cannot trust the global status Innodb_rows_read (nor the InnoDB Metrics dml_reads). These can lead to an incorrect query complexity analysis, to a mistaken estimation of the total load of a MySQL server, and to a lot of wasted time. As we go through the details in this post, we will understand why a SELECT * is sometimes faster than only selecting the columns from an index (we would normally expect the opposite) and we will visit inner workings of the MySQL Storage Engine Architecture. But first, let's start by exposing the problem.

Update 2023-06-27: I published another post about rows examined not trustworthy: Rows Examined Blindspot when Looking for non-Existing Data.

The Rows Examined Problem

A few months ago, I was trying to understand a query from the slow query log. This query was running for more than our slow query threshold, but only examining a few rows. In the environment I am working with (MySQL running in Kubernetes and hosted in AWS), this is usually caused either by a very slow read from a degraded or overloaded EBS volume, by an InnoDB micro-stall waiting for a free page (I realize this is a little cryptic, I have to write about this one day; update 2023-05-03: I am updating this post a little late, I wrote about this on 2022-11-22 in my post Tail Latencies in Percona Server because of InnoDB Stalls on Empty Free List), or by Kubernetes / cgroup CPU throttling. After some investigations, I was able to rule out all of these three conditions, so I looked again at the query and something struck me.

This query should examine much more rows !

Below is a table similar to the one that was queried, a count to give us a rows examined expectation (the dataset is generated with the scripts in annex #1), and a query similar to the one I was troubleshooting. By looking at the slow query log, we see that this query is retuning two rows and also examining two rows, but this is missleading.

# The table structure. ./use test_jfg <<< "SHOW CREATE TABLE t\G" *************************** 1. row *************************** Table: t Create Table: CREATE TABLE `t` ( `id` int NOT NULL AUTO_INCREMENT, `v1` int NOT NULL, `v2` int NOT NULL, `v3` int NOT NULL, `v4` int NOT NULL, PRIMARY KEY (`id`), KEY `v1_to_v3` (`v1`,`v2`,`v3`) ) ENGINE=InnoDB AUTO_INCREMENT=100001 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci # The row count for the first part of the where-clause. ./use test_jfg -e "SELECT COUNT(*) as count_v1_eq_0 FROM t WHERE v1 = 0" +---------------+ | count_v1_eq_0 | +---------------+ | 10149 | +---------------+ # The query, with truncating the slow log table before, and querying it after. cols="sql_text, query_time, rows_sent, rows_examined"; \ ./use mysql <<< "TRUNCATE slow_log"; \ ./use test_jfg <<< "SELECT * FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ ./use mysql <<< "SELECT ${cols} FROM slow_log WHERE sql_text LIKE 'SELECT%'\G" *************************** 1. row *************************** sql_text: SELECT * FROM test_jfg.t WHERE v1 = 0 AND v3 = 10 query_time: 00:00:00.002387 rows_sent: 2 rows_examined: 2 <<<===--- This is much lower than what we expect.

This query only "examining" two rows is very weird, because it should examine all the 10,149 rows matching v1 = 0. When faced with something I do not understand, I try to simplify things. In this case, I replaced the * with only columns from the index (covering index query). This gave me a rows examined that I was expecting, but kept me puzzled.

# Covering index query examining the expected number of rows. cols="sql_text, query_time, rows_sent, rows_examined"; \ ./use mysql <<< "TRUNCATE slow_log"; \ ./use test_jfg <<< "SELECT id, v2 FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ ./use mysql <<< "SELECT $cols FROM slow_log WHERE sql_text LIKE 'SELECT%'\G" *************************** 1. row *************************** sql_text: SELECT id, v2 FROM test_jfg.t WHERE v1 = 0 AND v3 = 10 query_time: 00:00:00.003634 rows_sent: 2 rows_examined: 10149 <<<===--- This is what I was expecting.

Also, while scratching my head on this, I saw that the SELECT * query is faster than the covering index query (timings below). This is surprising because the covering index query has less work to do than the SELECT * query.

# Timing running the SELECT * a few times. time yes "SELECT * FROM t WHERE v1 = 0 AND v3 = 10;" | head -n 1000 | ./use test_jfg > /dev/null real 0m2.303s user 0m0.031s sys 0m0.025s # Timing running the covering index SELECT a few times. time yes "SELECT id, v2 FROM t WHERE v1 = 0 AND v3 = 10;" | head -n 1000 | ./use test_jfg > /dev/null real 0m4.326s user 0m0.063s sys 0m0.020s

With these observations, I opened Bug#104659: Select * reporting less rows_examined than only fields in an index. Øystein Grøvlen (MySQL Bugs, LinkedIn, Twitter, Blog), a well-known optimizer expert, brought my attention on Index Condition Pushdown and its impact, and everything became clear.

As shown in annex #2, this also affects the Performance Schema tables events_statements_summary_by_digest and table_io_waits_summary_by_table, the InnoDB Metrics dml_reads and the global status Innodb_rows_read.

Before going into more details about the cause of this, let's see why this is a problem. The number of rows examined by a query is an important metric for query optimization. As an example, a query returning 2 rows and examining 20,000 rows is probably worth optimizing, but a query returning 2 rows and examining 2 rows looks completely fine. Also, the total number of rows read by MySQL is an important metric to understand the load of a MySQL server. With these bad reporting of rows examined, we can lose a lot of time before seeing - if we even get to this observation - that adding an index would make the query above much more efficient (as shown in annex #3, adding the right index makes this query 10 times faster). Also, a bad reporting of rows read by InnoDB makes it hard to correlate an increase in CPU consumption with an increase in rows read.

The bad reporting of rows examined

introduces a blind spot in MySQL,

making query optimization more complicated

and server load harder to assess

To understand where this bad reporting is coming from, we have to dive in the Index Condition Pushdown optimization.

The Index Condition Pushdown Optimization

Index Condition Pushdown (ICP) is an optimization introduced in MySQL 5.6 (WL#5639: Enable ICP optimizer feature). In the Index Condition Pushdown Optimization section of the MySQL Reference Manual we can read:

ICP can reduce the number of times the storage engine must access the base table and the number of times the MySQL server must access the storage engine.

To understand the quote above, we must talk about the MySQL Storage Engine Architecture.

The Storage Engine Architecture

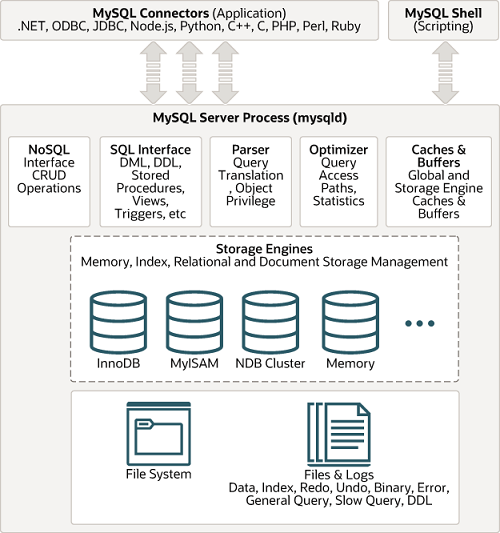

The image below is from the section Overview of MySQL Storage Engine Architecture of the MySQL Reference Manual. In this image, we see the whole MySQL Server [Process], with the Storage Engine sub-module. As shown below, there are many storage engine implementations including InnoDB and MyISAM, and there are others not listed below like BlackHole and MyRocks.

For having the possibility of using different storage engines and implementing new ones, a well define interface was implemented in MySQL 5.1. This interface is known as the Pluggable Storage Engine Architecture, previously called the Handler Interface. Describing this interface in detail is beyond the scope of this post, more can be read about this in the section Writing a Custom Storage Engine of the MySQL Internal Manual, and it is possible to play with part of this interface using the HANDLER Statement.

Back at the quote from the ICP documentation above, in it we can read that ICP can reduce [...] the number of times the MySQL server must access the storage engine. So we will now go into greater details about what it means to access the storage engine. This small detour will allow us to fully understand ICP a little later, in the meantime please keep we us.

Storage Engine Accesses

Let's take the very simple query below. This query is doing a range scan on the primary key with a limit of 10. During its execution, the storage engine is accessed 10 times: once to get the row at the beginning of the range and 9 times to get the next rows (it is probably accessed more to open and close tables, but we do not need this level of detail here). We can see the matching Handler_read_% statuses generated by this query (the legacy name of the Storage Engine Architecture - the Handler Interface - explains the name of these statuses).

# The Handler_read_% statuses for a simple primary key range scan query. mysql > FLUSH LOCAL STATUS; Query OK, 0 rows affected (0.00 sec) mysql > pager cat > /dev/null; SELECT * FROM t WHERE id > 10 ORDER BY id LIMIT 10; pager; PAGER set to 'cat > /dev/null' 10 rows in set (0.00 sec) Default pager wasn't set, using stdout. mysql > SHOW STATUS LIKE 'Handler_read_%'; +-----------------------+-------+ | Variable_name | Value | +-----------------------+-------+ | Handler_read_first | 0 | | Handler_read_key | 1 | | Handler_read_last | 0 | | Handler_read_next | 9 | | Handler_read_prev | 0 | | Handler_read_rnd | 0 | | Handler_read_rnd_next | 0 | +-----------------------+-------+ 7 rows in set (0.00 sec)

For a query using an index and only returning columns from the index (index covering query), we have something very similar to the above example: the storage engine is again accessed 10 times with a little variation showing Handler_read_prev instead of Handler_read_next because of the ORDER BY v2 DESC.

# The Handler_read_% statuses for a relatively simple index query. mysql > FLUSH LOCAL STATUS; Query OK, 0 rows affected (0.00 sec) mysql > pager cat > /dev/null; SELECT id, v2, v3 FROM t WHERE v1 = 1 ORDER BY v2 DESC LIMIT 10; pager; PAGER set to 'cat > /dev/null' 10 rows in set (0.00 sec) Default pager wasn't set, using stdout. mysql > SHOW STATUS LIKE 'Handler_read_%'; +-----------------------+-------+ | Variable_name | Value | +-----------------------+-------+ | Handler_read_first | 0 | | Handler_read_key | 1 | | Handler_read_last | 0 | | Handler_read_next | 0 | | Handler_read_prev | 9 | | Handler_read_rnd | 0 | | Handler_read_rnd_next | 0 | +-----------------------+-------+ 7 rows in set (0.00 sec)

For a query using an index and returning columns that are not in the index, MySQL needs to do more work than for the two above queries. In addition to scanning an index (the primary key is an "index"), the query below needs to do primary key lookups. These lookups will happen in the storage engine just after reading each index row and before returning the data. From the point of view of the MySQL Server accessing the storage engine, these primary key lookups are hidden. So it is not possible to know that extra work has been performed for the query below, compared to the queries above, with just looking at the Handler_read_% statuses.

# The Handler_read_% statuses for a relatively simple index query # needing columns not in the index. mysql > FLUSH LOCAL STATUS; Query OK, 0 rows affected (0.00 sec) mysql > pager cat > /dev/null; SELECT * FROM t WHERE v1 = 1 LIMIT 10; pager; PAGER set to 'cat > /dev/null' 10 rows in set (0.00 sec) Default pager wasn't set, using stdout. mysql > SHOW STATUS LIKE 'Handler_read_%'; +-----------------------+-------+ | Variable_name | Value | +-----------------------+-------+ | Handler_read_first | 0 | | Handler_read_key | 1 | | Handler_read_last | 0 | | Handler_read_next | 9 | | Handler_read_prev | 0 | | Handler_read_rnd | 0 | | Handler_read_rnd_next | 0 | +-----------------------+-------+ 7 rows in set (0.00 sec)

The query above allows us to get back to the quote from the ICP documentation above. In it, when we read that ICP can reduce the number of times the storage engine must access the base table [...], the primary key lookups of the above query are such "accesses to the base table". We now have all the pieces to go back to ICP, but before that, let's look at the Handler_read_% statuses of the index covering version of the ICP query at the beginning of the post.

# The Handler_read_% statuses for the index covering version # of the ICP query at the beginning of the post. mysql > FLUSH LOCAL STATUS; Query OK, 0 rows affected (0.00 sec) mysql > SELECT id, v2 FROM t WHERE v1 = 0 AND v3 = 10; +-------+-------+ | id | v2 | +-------+-------+ | 13819 | 13819 | | 42524 | 42524 | +-------+-------+ 2 rows in set (0.00 sec) mysql > SHOW STATUS LIKE 'Handler_read_%'; +-----------------------+-------+ | Variable_name | Value | +-----------------------+-------+ | Handler_read_first | 0 | | Handler_read_key | 1 | | Handler_read_last | 0 | | Handler_read_next | 10149 | | Handler_read_prev | 0 | | Handler_read_rnd | 0 | | Handler_read_rnd_next | 0 | +-----------------------+-------+ 7 rows in set (0.00 sec)

To execute the query above, the MySQL Server accesses the storage engine 10,149 times for returning the rows matching v1 = 0, and then the Server, not the storage engine, is enforcing the v3 = 10 condition to only return 2 rows.

Back at the Index Condition Pushdown Optimization

Now that we understand the MySQL Storage Engine Architecture and the corresponding Handler_read_% statuses, we can come back to the ICP query at the beginning of the post. In below, while running this query with ICP disabled, we see that the storage engine is accessed 10,149 times, each of these calls hiding a primary key lookup, with the MySQL Server enforcing the v3 = 10 condition to only return 2 rows.

# The Handler_read_% statuses for the initial query of the post with ICP disabled. mysql > FLUSH LOCAL STATUS; Query OK, 0 rows affected (0.00 sec) mysql > SELECT /*+ NO_ICP(t) */ * FROM t WHERE v1 = 0 AND v3 = 10; +-------+----+-------+----+-------+ | id | v1 | v2 | v3 | v4 | +-------+----+-------+----+-------+ | 13819 | 0 | 13819 | 10 | 13819 | | 42524 | 0 | 42524 | 10 | 42524 | +-------+----+-------+----+-------+ 2 rows in set (0.02 sec) mysql > SHOW STATUS LIKE 'Handler_read_%'; +-----------------------+-------+ | Variable_name | Value | +-----------------------+-------+ | Handler_read_first | 0 | | Handler_read_key | 1 | | Handler_read_last | 0 | | Handler_read_next | 10149 | | Handler_read_prev | 0 | | Handler_read_rnd | 0 | | Handler_read_rnd_next | 0 | +-----------------------+-------+ 7 rows in set (0.00 sec)

In above, all but 2 primary key lookups are useless because by looking at the data in the index, we could know if the full row was needed. In this specific case, the table is not very big and fits in the InnoDB Buffer Pool, so each primary key lookup is fast because it is a cache-hit. But if the table would not fit in RAM, each of these primary key lookups would need a disk read, which would make the query much slower, and would consume many IO operations. Before ICP, the way to optimize this query was the Late Row Lookup trick. This trick consists in rewriting the query as below.

# Query with the late row lookup trick. mysql > SELECT u.* FROM (SELECT id FROM t WHERE v1 = 0 AND v3 = 10) t JOIN t u ON t.id = u.id; +-------+----+-------+----+-------+ | id | v1 | v2 | v3 | v4 | +-------+----+-------+----+-------+ | 36905 | 0 | 36905 | 10 | 36905 | | 70507 | 0 | 70507 | 10 | 70507 | +-------+----+-------+----+-------+ 2 rows in set (0.01 sec)

With the rewritten query, we first extract the primary keys (ids) of the rows we need with doing an index range scan. After that, with the help of a JOIN, we do the primary key lookups only for the needed rows. The new query is more complex, and it is also accessing the storage engine a little more than the more simple query above, but as each of the storage engine accesses on the v1_to_v3 index do not need a primary key lookup, the whole query is faster (timings below: 8.063s vs 2.366s for running the query 500 times).

# Time for running the query without ICP a few times. time yes "SELECT /*+ NO_ICP(t) */ * FROM t WHERE v1 = 0 AND v3 = 10;" | head -n 500 | ./use test_jfg > /dev/null real 0m8.063s user 0m0.037s sys 0m0.016s # Time for running the late row lookup query a few times. time yes "SELECT * FROM (SELECT id FROM t WHERE v1 = 0 AND v3 = 10) t JOIN t u ON t.id = u.id;" | head -n 500 | ./use test_jfg > /dev/null real 0m2.366s user 0m0.038s sys 0m0.014s

The late row lookup trick is achieving half of what ICP is doing. The trick reduces the number of times the storage engine must access the base table (primary key lookups), but it still needs accessing the storage engine as many times as before (actually, more accesses are needed for the primary key lookups). For doing fewer accesses, an additional condition is pushed down to the storage engine. We can see this condition by using EXPLAIN FORMAT=JSON.

# Plan of the ICP query with JSON output (rewritten for compactness). mysql > EXPLAIN FORMAT=JSON SELECT * FROM t WHERE v1 = 0 AND v3 = 10\G *************************** 1. row *************************** EXPLAIN: { "query_block": { "select_id": 1, "cost_info": { "query_cost": "2109.35" }, "table": { "table_name": "t", "access_type": "ref", "possible_keys": [ "v1_to_v3" ], "key": "v1_to_v3", "used_key_parts": [ "v1" ], "key_length": "4", "ref": [ "const" ], "rows_examined_per_scan": 18926, "rows_produced_per_join": 1892, "filtered": "10.00", "index_condition": "(`test_jfg`.`t`.`v3` = 10)", "cost_info": { "read_cost": "216.75", "eval_cost": "189.26", "prefix_cost": "2109.35", "data_read_per_join": "44K" }, "used_columns": [ "id", "v1", "v2", "v3", "v4" ] } } } 1 row in set, 1 warning (0.00 sec)

What above ICP plan means for the storage engine is:

- while looking at rows where v1 = 0 in the v1_to_v3 index,

- also filters on rows where v3 = 10,

- and on the matching rows, do a primary key lookup for returning the requested data.

When looking at the simplified plan (Using index condition in below means ICP is used) and the Handler_read_% statuses for this query, we have below.

# Simplified plan of the ICP query. mysql > EXPLAIN SELECT * FROM t WHERE v1 = 0 AND v3 = 10\G *************************** 1. row *************************** id: 1 select_type: SIMPLE table: t partitions: NULL type: ref possible_keys: v1_to_v3 key: v1_to_v3 key_len: 4 ref: const rows: 18926 filtered: 10.00 Extra: Using index condition 1 row in set, 1 warning (0.00 sec) # The Handler_read_% statuses for the above query. mysql > FLUSH LOCAL STATUS; Query OK, 0 rows affected (0.00 sec) mysql > SELECT * FROM t WHERE v1 = 0 AND v3 = 10; +-------+----+-------+----+-------+ | id | v1 | v2 | v3 | v4 | +-------+----+-------+----+-------+ | 36905 | 0 | 36905 | 10 | 36905 | | 70507 | 0 | 70507 | 10 | 70507 | +-------+----+-------+----+-------+ 2 rows in set (0.00 sec) mysql > SHOW STATUS LIKE 'Handler_read_%'; +-----------------------+-------+ | Variable_name | Value | +-----------------------+-------+ | Handler_read_first | 0 | | Handler_read_key | 1 | | Handler_read_last | 0 | | Handler_read_next | 2 | | Handler_read_prev | 0 | | Handler_read_rnd | 0 | | Handler_read_rnd_next | 0 | +-----------------------+-------+ 7 rows in set (0.01 sec)

In above, we effectively have the storage engine only being accessed 3 times. As shown below, this effectively is much faster than without ICP (1.251s vs 8.063s) and it is also faster than with the late row lookup trick (2.366s).

# Time for running the query with ICP a few times. time yes "SELECT * FROM t WHERE v1 = 0 AND v3 = 10;" | head -n 500 | ./use test_jfg > /dev/null real 0m1.251s user 0m0.028s sys 0m0.013s

But with ICP, not only can a storage engine access hides a primary key lookup, it can also hide scanning more than one row.

With Index Condition Pushdown,

a single access to the storage engine

can examine more than one row

Index Condition Pushdown Muddling the Water

After explaining ICP and the Storage Engine Architecture, we can now come back to why we have fewer than expected rows examined with an ICP query. We can make an educated guess about the reason:

When reporting rows examined,

MySQL is actually reporting

rows returned by the storage engine

Before ICP, the only operation hidden by the Storage Engine Architecture was doing primary key lookups. So before ICP, the number or rows examined by a query could be measured by counting the number of rows returned by the storage engine. However, with ICP, because a storage engine access can examine many rows, this shortcut is not valid anymore. And this explains why rows examined is not trustworthy for queries using ICP. As shown before, this problem impacts the slow log, the Performance Schema tables events_statements_summary_by_digest and table_io_waits_summary_by_table, the InnoDB metric dml_reads and the global status Innodb_rows_read.

Fixing this will need modifying the storage engine interface for exposing the number of rows examined by a storage engine access (one in most cases, more than one in the case of ICP). At the same time, it would be interesting to remove the previous blind spot of the Storage Engine Architecture: if a call did or did not do a primary key lookup. Once having these metrics, they will have to be exposed in a backward compatible way everywhere (probably new columns in the slow log, in Performance Schema tables, in InnoDB metrics, and in statuses). These are complex modifications and they will not happen quickly.

One thing you can do to get a better visibility of the impact of ICP at the global level is to enable the ICP module of the InnoDB metrics and to look at icp_no_match. From what I understand, this metric is the total number of rows examined hidden by ICP in the storage engine. By adding icp_no_match to dml_reads, the real total number of rows examined by InnoDB can be obtained.

# Monitoring the rows examined by ICP in the storage engine with InnoDB Metrics. ./use <<< "SET GLOBAL innodb_monitor_enable = module_icp"; \ sql="SELECT COUNT FROM INNODB_METRICS WHERE NAME = 'icp_no_match'"; \ c1="$(./use -N information_schema <<< "${sql}")"; \ ./use test_jfg <<< "SELECT * FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ c2="$(./use -N information_schema <<< "${sql}")"; \ echo $c2 - $c1 = $(($c2 - $c1)) 10147 - 0 = 10147

I am sorry to close this post without a good solution for rows examined at the query level. Øystein wrote it in a very clear way: "ICP muddles the water" for rows examined. The only thing you can do now is being aware of it, and not blindly trusting Rows Examined and the other impacted metrics.

Annex #1: Generating a dataset for our tests

# Create a sandbox with the slow query log enabled in a table. # Also, disabling binary logs and disk sync to make creating the dataset faster. dbdeployer deploy single mysql_8.0.28 \ -c 'slow_query_log = ON' -c 'log_output = "TABLE"' -c 'long_query_time = 0' \ -c skip_log_bin -c 'innodb_flush_log_at_trx_commit = 2' # Create the schema. ./use <<< " CREATE DATABASE test_jfg; CREATE TABLE test_jfg.t ( id INTEGER NOT NULL AUTO_INCREMENT PRIMARY KEY, v1 INTEGER NOT NULL, v2 INTEGER NOT NULL, v3 INTEGER NOT NULL, v4 INTEGER NOT NULL, KEY v1_to_v3 (v1, v2, v3))" # Insert 100,000 rows in the table with random v1 and v3 between 0 and 9 inclusive. seq 1 100000 | awk 'BEGIN{srand(0)} {print "(" int(rand()*10), $1, int(rand()*10), $1 ")"}' | tr " " "," | paste -s -d "$(printf ',%.0s' {1..100})\n" | sed -e 's/.*/INSERT into t(v1,v2,v3,v4) values &;/' | ./use test_jfg # Set v3 to 10 on 2 random rows where v1 = 0. ./use -N test_jfg <<< "SELECT id FROM t WHERE v1 = 0" | sort -R | head -n 2 | paste -s -d "," | sed -e 's/.*/UPDATE t SET v3 = 10 WHERE id IN (&)/' |

./use test_jfg

Annex #2: the Problem is also in Performance Schema and InnoDB

# The query, with truncating the digest table before and checking it after. ./use performance_schema <<< "TRUNCATE events_statements_summary_by_digest"; \ ./use test_jfg <<< "SELECT * FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ ./use performance_schema <<< " SELECT QUERY_SAMPLE_TEXT, COUNT_STAR, SUM_ROWS_SENT, SUM_ROWS_EXAMINED FROM events_statements_summary_by_digest WHERE DIGEST_TEXT like '%v1%'\G" *************************** 1. row *************************** QUERY_SAMPLE_TEXT: SELECT * FROM t WHERE v1 = 0 AND v3 = 10 COUNT_STAR: 1 SUM_ROWS_SENT: 2 SUM_ROWS_EXAMINED: 2 # Covering index variation of above. ./use performance_schema <<< "TRUNCATE events_statements_summary_by_digest"; \ ./use test_jfg <<< "SELECT v2 FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ ./use performance_schema <<< " SELECT QUERY_SAMPLE_TEXT, COUNT_STAR, SUM_ROWS_SENT, SUM_ROWS_EXAMINED FROM events_statements_summary_by_digest WHERE DIGEST_TEXT like '%v1%'\G" *************************** 1. row *************************** QUERY_SAMPLE_TEXT: SELECT v2 FROM t WHERE v1 = 0 AND v3 = 10 COUNT_STAR: 1 SUM_ROWS_SENT: 2 SUM_ROWS_EXAMINED: 10149 # The query, with truncating the table_io_waits table before and checking it after. ./use performance_schema <<< "TRUNCATE table_io_waits_summary_by_table"; \ ./use test_jfg <<< "SELECT * FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ ./use performance_schema <<< " SELECT COUNT_STAR FROM table_io_waits_summary_by_table WHERE OBJECT_SCHEMA = 'test_jfg' AND OBJECT_NAME = 't'\G" *************************** 1. row *************************** COUNT_STAR: 2 # Covering index variation of above. ./use performance_schema <<< "TRUNCATE table_io_waits_summary_by_table"; \ ./use test_jfg <<< "SELECT v2 FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ ./use performance_schema <<< " SELECT COUNT_STAR FROM table_io_waits_summary_by_table WHERE OBJECT_SCHEMA = 'test_jfg' AND OBJECT_NAME = 't'\G" *************************** 1. row *************************** COUNT_STAR: 10149 # The query, with computing the delta of dml_read before and after. ./use <<< "SET GLOBAL innodb_monitor_enable = dml_reads"; \ sql="SELECT COUNT FROM INNODB_METRICS WHERE NAME = 'dml_reads'"; \ c1="$(./use -N information_schema <<< "${sql}")"; \ ./use test_jfg <<< "SELECT * FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ c2="$(./use -N information_schema <<< "${sql}")"; \ echo $c2 - $c1 = $(($c2 - $c1)) 2 - 0 = 2 # Covering index variation of above. ./use <<< "SET GLOBAL innodb_monitor_enable = dml_reads"; \ sql="SELECT COUNT FROM INNODB_METRICS WHERE NAME = 'dml_reads'"; \ c1="$(./use -N information_schema <<< "${sql}")"; \ ./use test_jfg <<< "SELECT v2 FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ c2="$(./use -N information_schema <<< "${sql}")"; \ echo $c2 - $c1 = $(($c2 - $c1)) 10151 - 2 = 10149 # The query, with computing the delta of Innodb_rows_read before and after. sql="SHOW GLOBAL STATUS LIKE 'Innodb_rows_read'"; \ c1="$(./use -N <<< "${sql}" | awk '{print $2}')"; \ ./use test_jfg <<< "SELECT * FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ c2="$(./use -N <<< "${sql}" | awk '{print $2}')"; \ echo $c2 - $c1 = $(($c2 - $c1)) 60906 - 60904 = 2 # Covering index variation of above. sql="SHOW GLOBAL STATUS LIKE 'Innodb_rows_read'"; \ c1="$(./use -N <<< "${sql}" | awk '{print $2}')"; \ ./use test_jfg <<< "SELECT v2 FROM t WHERE v1 = 0 AND v3 = 10" > /dev/null; \ c2="$(./use -N <<< "${sql}" | awk '{print $2}')"; \ echo $c2 - $c1 = $(($c2 - $c1)) 71055 - 60906 = 10149

Annex #3: Running the Query with the Right Index

# Create two new tables, with the 2nd having the right index, and fill them both. ./use test_jfg <<< " CREATE TABLE t1 LIKE t; CREATE TABLE t2 LIKE t; ALTER TABLE t2 ADD KEY v1_n_v3 (v1, v3); INSERT INTO t1 SELECT * FROM t; INSERT INTO t2 SELECT * FROM t" # Time running the query a few times without and with the right index. time yes "SELECT * FROM t1 WHERE v1 = 0 AND v3 = 10;" | head -n 1000 | ./use test_jfg > /dev/null real 0m2.585s user 0m0.058s sys 0m0.028s time yes "SELECT * FROM t2 WHERE v1 = 0 AND v3 = 10;" | head -n 1000 | ./use test_jfg > /dev/null real 0m0.251s user 0m0.044s sys 0m0.010s

No comments:

Post a Comment